Stratified K-fold CV for regression analysis

This example uses the diabetes data from sklearn datasets to perform stratified K-fold CV for a regression problem.

# Authors: Shammi More <s.more@fz-juelich.de>

# Federico Raimondo <f.raimondo@fz-juelich.de>

# Leonard Sasse <l.sasse@fz-juelich.de>

# License: AGPL

import pandas as pd

import seaborn as sns

import matplotlib.pyplot as plt

from sklearn.datasets import load_diabetes

from sklearn.model_selection import KFold

from julearn import run_cross_validation

from julearn.utils import configure_logging

from julearn.model_selection import ContinuousStratifiedKFold

Set the logging level to info to see extra information.

configure_logging(level="INFO")

2025-07-07 11:52:55,344 - julearn - INFO - ===== Lib Versions =====

2025-07-07 11:52:55,345 - julearn - INFO - numpy: 1.26.4

2025-07-07 11:52:55,345 - julearn - INFO - scipy: 1.15.3

2025-07-07 11:52:55,345 - julearn - INFO - sklearn: 1.5.2

2025-07-07 11:52:55,345 - julearn - INFO - pandas: 2.2.3

2025-07-07 11:52:55,345 - julearn - INFO - julearn: 0.3.5.dev28

2025-07-07 11:52:55,345 - julearn - INFO - ========================

Load the diabetes data from sklearn as a pandas.DataFrame.

features, target = load_diabetes(return_X_y=True, as_frame=True)

The dataset contains ten baseline variables for 442 diabetes patients (age, sex, body mass index, average blood pressure, and six blood serum measurements, s1-s6) and a quantitative measure of disease progression one year after baseline, which is the target we want to predict.

print("Features: \n", features.head())

print("Target: \n", target.describe())

Features:
        age       sex       bmi  ...        s4        s5        s6
0  0.038076  0.050680  0.061696  ... -0.002592  0.019907 -0.017646
1 -0.001882 -0.044642 -0.051474  ... -0.039493 -0.068332 -0.092204
2  0.085299  0.050680  0.044451  ... -0.002592  0.002861 -0.025930
3 -0.089063 -0.044642 -0.011595  ...  0.034309  0.022688 -0.009362
4  0.005383 -0.044642 -0.036385  ... -0.002592 -0.031988 -0.046641

[5 rows x 10 columns]

Target:
count    442.000000
mean     152.133484
std       77.093005
min       25.000000
25%       87.000000
50%      140.500000
75%      211.500000
max      346.000000
Name: target, dtype: float64

Let’s combine features and target together in one dataframe and create some outliers to see the difference in model performance with and without stratification.

data_df = pd.concat([features, target], axis=1)

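# Create outliers for testing purposes (the seven rows with 145 < target <= 150)

# .copy() makes new_df independent of data_df and avoids a SettingWithCopyWarning

new_df = data_df[(data_df.target > 145) & (data_df.target <= 150)].copy()

new_df["target"] = [590, 580, 597, 595, 590, 590, 600]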

data_df = pd.concat([data_df, new_df], axis=0)

data_df = data_df.reset_index(drop=True)

# Define X, y

X = ["age", "sex", "bmi", "bp", "s1", "s2", "s3", "s4", "s5", "s6"]

y = "target"

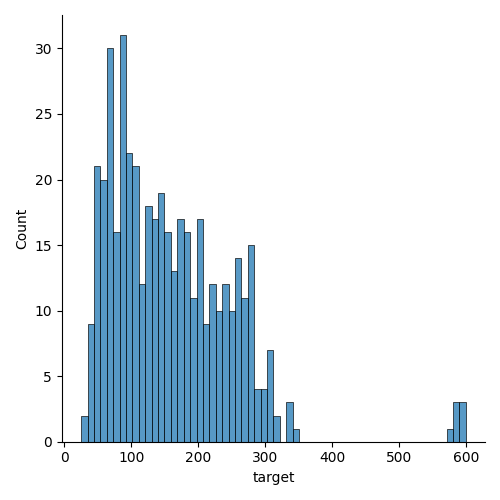

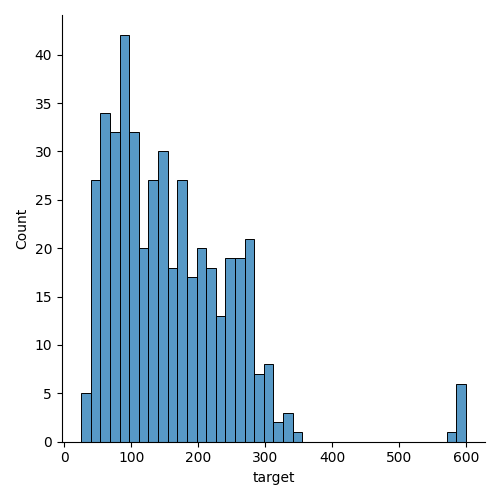

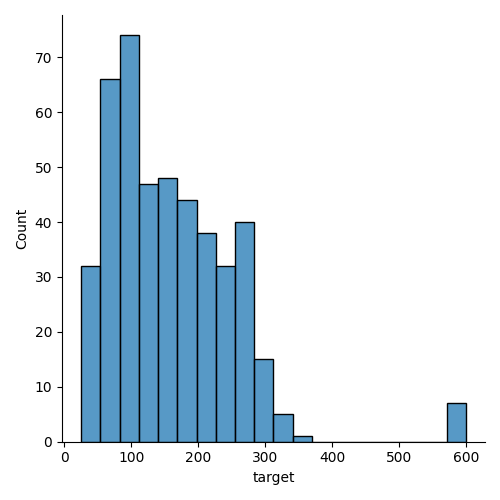

Define number of bins/group for stratification. The idea is that each “bin” will be equally represented in each fold. The number of bins should be chosen such that each bin has a sufficient number of samples so that each fold has more than one sample from each bin. Let’s see a couple of histrograms with different number of bins.

From the histograms above, we can see that the target is not uniformly distributed: it is skewed towards lower values, and the artificial outliers form a small, isolated group at the upper end. Whatever number of bins we choose, some bins hold so few samples that, even with a low number of splits, they cannot be represented in every fold. Let’s continue with 40 bins, which gives good granularity.
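Before committing to a number of bins, one can count how many samples fall into each bin. The sketch below uses a hypothetical helper, `sparse_bins`, which is not part of julearn. It assumes equal-width bins, which is what `numpy.histogram` builds for an integer `bins` argument, and it flags every non-empty bin holding fewer samples than there are folds.

```python
import numpy as np


def sparse_bins(y, n_bins, n_splits):
    """Count samples per equal-width bin and find the non-empty bins that
    hold fewer than ``n_splits`` samples (hypothetical helper)."""
    counts, _ = np.histogram(y, bins=n_bins)
    # Empty bins never need to appear in a fold, so only non-empty ones count.
    too_small = np.flatnonzero((counts > 0) & (counts < n_splits))
    return counts, too_small


# Toy target: a dense cluster plus two isolated extreme values.
y_toy = np.array([10, 11, 12, 12, 13, 14, 15, 15, 16, 17, 90, 95])
counts, too_small = sparse_bins(y_toy, n_bins=4, n_splits=3)
print(counts.tolist())     # [10, 0, 0, 2]
print(too_small.tolist())  # [3]: the last bin has only 2 samples for 3 folds
```

On this page's data, `sparse_bins(data_df["target"], n_bins=40, n_splits=5)` would list the bins that cannot reach every fold; the scikit-learn warning printed during training (a class with only 1 member) presumably corresponds to such a bin.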

cv_stratified = ContinuousStratifiedKFold(n_bins=40, n_splits=5, shuffle=False)
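`ContinuousStratifiedKFold` turns the continuous target into discrete groups so that a stratified splitter can balance them across folds; the scikit-learn warning about the least populated class, printed during training, suggests that it builds on scikit-learn's stratified splitting. The following dependency-free sketch illustrates the idea under our own assumptions and is not julearn's implementation: equal-width bins, no shuffling, and the members of each bin dealt round-robin over the folds. The name `binned_stratified_kfold` is hypothetical.

```python
import numpy as np


def binned_stratified_kfold(y, n_bins, n_splits):
    """Yield (train_idx, test_idx) pairs in which each target bin is spread
    as evenly as possible over the folds (hypothetical helper, no shuffling)."""
    y = np.asarray(y, dtype=float)
    # Discretize the continuous target into equal-width bins (an assumption).
    edges = np.histogram_bin_edges(y, bins=n_bins)
    labels = np.digitize(y, edges[1:-1])  # bin index in 0 .. n_bins - 1
    fold_of = np.empty(len(y), dtype=int)
    offset = 0
    for label in np.unique(labels):
        members = np.flatnonzero(labels == label)
        # Deal this bin's samples out over the folds, continuing where the
        # previous bin stopped so that the fold sizes stay balanced.
        fold_of[members] = (np.arange(len(members)) + offset) % n_splits
        offset += len(members)
    indices = np.arange(len(y))
    for k in range(n_splits):
        yield indices[fold_of != k], indices[fold_of == k]


# Toy target: 20 evenly spaced values, i.e. 4 bins of 5 samples each.
for train_idx, test_idx in binned_stratified_kfold(np.arange(20), n_bins=4, n_splits=5):
    print(test_idx.tolist())  # fold 0 prints [0, 5, 10, 15]: one sample per bin
```

A bin with fewer members than folds can only reach some of the folds, which is the situation the scikit-learn warning reports.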

Train a linear regression model with stratification on target.

2025-07-07 11:52:55,886 - julearn - INFO - ==== Input Data ====

2025-07-07 11:52:55,886 - julearn - INFO - Using dataframe as input

2025-07-07 11:52:55,887 - julearn - INFO - Features: ['age', 'sex', 'bmi', 'bp', 's1', 's2', 's3', 's4', 's5', 's6']

2025-07-07 11:52:55,887 - julearn - INFO - Target: target

2025-07-07 11:52:55,887 - julearn - INFO - Expanded features: ['age', 'sex', 'bmi', 'bp', 's1', 's2', 's3', 's4', 's5', 's6']

2025-07-07 11:52:55,887 - julearn - INFO - X_types:{}

2025-07-07 11:52:55,887 - julearn - WARNING - The following columns are not defined in X_types: ['age', 'sex', 'bmi', 'bp', 's1', 's2', 's3', 's4', 's5', 's6']. They will be treated as continuous.

2025-07-07 11:52:55,888 - julearn - INFO - ====================

2025-07-07 11:52:55,888 - julearn - INFO -

2025-07-07 11:52:55,888 - julearn - INFO - Adding step zscore that applies to ColumnTypes<types={'continuous'}; pattern=(?:__:type:__continuous)>

2025-07-07 11:52:55,888 - julearn - INFO - Step added

2025-07-07 11:52:55,888 - julearn - INFO - Adding step linreg that applies to ColumnTypes<types={'continuous'}; pattern=(?:__:type:__continuous)>

2025-07-07 11:52:55,888 - julearn - INFO - Step added

2025-07-07 11:52:55,889 - julearn - INFO - = Model Parameters =

2025-07-07 11:52:55,889 - julearn - INFO - ====================

2025-07-07 11:52:55,889 - julearn - INFO -

2025-07-07 11:52:55,889 - julearn - INFO - = Data Information =

2025-07-07 11:52:55,889 - julearn - INFO - Problem type: regression

2025-07-07 11:52:55,889 - julearn - INFO - Number of samples: 449

2025-07-07 11:52:55,889 - julearn - INFO - Number of features: 10

2025-07-07 11:52:55,889 - julearn - INFO - ====================

2025-07-07 11:52:55,889 - julearn - INFO -

2025-07-07 11:52:55,889 - julearn - INFO - Target type: float64

2025-07-07 11:52:55,889 - julearn - INFO - Using outer CV scheme ContinuousStratifiedKFold(method='binning', n_bins=40, n_splits=5,

random_state=None, shuffle=False) (incl. final model)

UserWarning: The least populated class in y has only 1 members, which is less than n_splits=5.

Train a linear regression model without stratification on target.

2025-07-07 11:52:55,939 - julearn - INFO - ==== Input Data ====

2025-07-07 11:52:55,939 - julearn - INFO - Using dataframe as input

2025-07-07 11:52:55,939 - julearn - INFO - Features: ['age', 'sex', 'bmi', 'bp', 's1', 's2', 's3', 's4', 's5', 's6']

2025-07-07 11:52:55,939 - julearn - INFO - Target: target

2025-07-07 11:52:55,939 - julearn - INFO - Expanded features: ['age', 'sex', 'bmi', 'bp', 's1', 's2', 's3', 's4', 's5', 's6']

2025-07-07 11:52:55,939 - julearn - INFO - X_types:{}

2025-07-07 11:52:55,939 - julearn - WARNING - The following columns are not defined in X_types: ['age', 'sex', 'bmi', 'bp', 's1', 's2', 's3', 's4', 's5', 's6']. They will be treated as continuous.

2025-07-07 11:52:55,940 - julearn - INFO - ====================

2025-07-07 11:52:55,940 - julearn - INFO -

2025-07-07 11:52:55,940 - julearn - INFO - Adding step zscore that applies to ColumnTypes<types={'continuous'}; pattern=(?:__:type:__continuous)>

2025-07-07 11:52:55,940 - julearn - INFO - Step added

2025-07-07 11:52:55,940 - julearn - INFO - Adding step linreg that applies to ColumnTypes<types={'continuous'}; pattern=(?:__:type:__continuous)>

2025-07-07 11:52:55,940 - julearn - INFO - Step added

2025-07-07 11:52:55,940 - julearn - INFO - = Model Parameters =

2025-07-07 11:52:55,941 - julearn - INFO - ====================

2025-07-07 11:52:55,941 - julearn - INFO -

2025-07-07 11:52:55,941 - julearn - INFO - = Data Information =

2025-07-07 11:52:55,941 - julearn - INFO - Problem type: regression

2025-07-07 11:52:55,941 - julearn - INFO - Number of samples: 449

2025-07-07 11:52:55,941 - julearn - INFO - Number of features: 10

2025-07-07 11:52:55,941 - julearn - INFO - ====================

2025-07-07 11:52:55,941 - julearn - INFO -

2025-07-07 11:52:55,941 - julearn - INFO - Target type: float64

2025-07-07 11:52:55,941 - julearn - INFO - Using outer CV scheme KFold(n_splits=5, random_state=None, shuffle=False) (incl. final model)
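Because `KFold` is used with `shuffle=False`, each test fold is a contiguous block of rows, and the seven outliers were appended as the last rows of `data_df` (positions 442 to 448 after `reset_index`). The sketch below uses a hypothetical helper that reproduces how scikit-learn's `KFold` sizes its folds (the first `n_samples % n_splits` folds get one extra sample) to show where the outliers end up: all of them in the last test fold. In that fold the model has seen none of the outliers during training, while in the other four folds all of them are in the training set and none in the test set. This only describes the split; it does not by itself predict which scheme scores better.

```python
import numpy as np


def contiguous_kfold_test_folds(n_samples, n_splits):
    """Return, for every sample, the index of the fold in which it is used
    for testing when folds are contiguous blocks (no shuffling)."""
    # The first n_samples % n_splits folds receive one extra sample.
    sizes = np.full(n_splits, n_samples // n_splits)
    sizes[: n_samples % n_splits] += 1
    return np.repeat(np.arange(n_splits), sizes)


fold_of = contiguous_kfold_test_folds(n_samples=449, n_splits=5)
outlier_rows = np.arange(442, 449)  # the seven rows appended by pd.concat
print(np.unique(fold_of[outlier_rows]).tolist())  # [4]: all in the last fold
```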

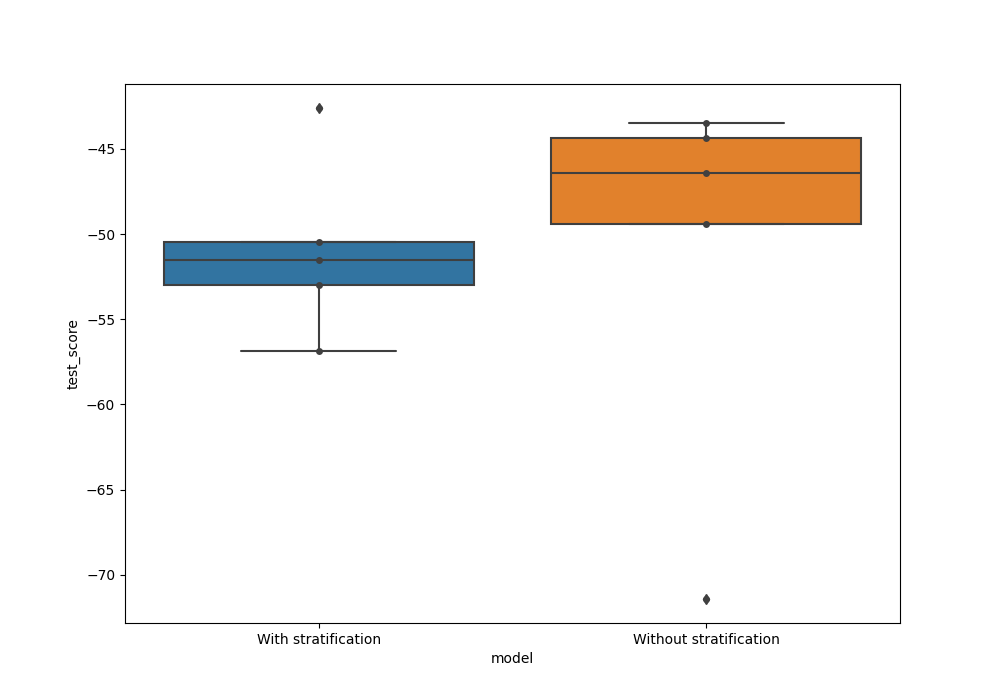

Now we can compare the test scores of the models trained with and without stratification. We can combine the two outputs into a single pandas.DataFrame.

scores_strat["model"] = "With stratification"

scores["model"] = "Without stratification"

df_scores = scores_strat[["test_score", "model"]]

df_scores = pd.concat([df_scores, scores[["test_score", "model"]]])
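To complement the boxplot with numbers, the combined frame can be summarized per CV scheme. A minimal sketch with a hypothetical helper, `summarize_scores`, assuming `df_scores` has the `test_score` and `model` columns built above:

```python
import pandas as pd


def summarize_scores(df_scores: pd.DataFrame) -> pd.DataFrame:
    """One row per CV scheme with the mean, standard deviation, minimum and
    maximum test score across folds (hypothetical helper)."""
    return df_scores.groupby("model")["test_score"].agg(["mean", "std", "min", "max"])
```

With the frames above, `print(summarize_scores(df_scores))` prints one row for "With stratification" and one for "Without stratification". The duplicated index values left by `pd.concat` do not affect the grouping.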

Plot a boxplot of the test scores of both models. Here, the test score is higher when the CV splits were not stratified.

Total running time of the script: (0 minutes 0.806 seconds)