Note

This page is a reference documentation. It only explains the function signature, and not how to use it. Please refer to the What you really need to know section for the big picture.

julearn.run_cross_validation#

- julearn.run_cross_validation(X, y, model, data, X_types=None, problem_type=None, preprocess=None, return_estimator=None, return_inspector=False, return_train_score=False, cv=None, groups=None, scoring=None, pos_labels=None, model_params=None, search_params=None, seed=None, n_jobs=None, verbose=0)#

Run cross validation and score.

- Parameters:

- Xlist of str

The features to use. See Data for details.

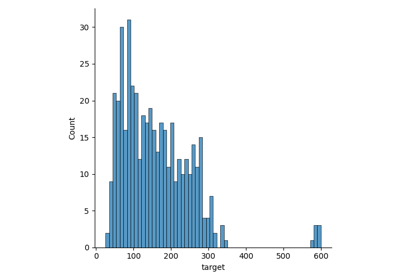

- ystr

The targets to predict. See Data for details.

- modelstr or scikit-learn compatible model.

If string, it will use one of the available models.

- datapandas.DataFrame

DataFrame with the data. See Data for details.

- X_typesdict[str, list of str]

A dictionary containing keys with column type as a str and the columns of this column type as a list of str.

- problem_typestr

The kind of problem to model.

Options are:

“classification”: Perform a classification in which the target (y) has categorical classes (default). The parameter pos_labels can be used to convert a target with multiple_classes into binary.

“regression”. Perform a regression. The target (y) has to be ordinal at least.

- preprocessstr, TransformerLike or list or PipelineCreator | None

Transformer to apply to the features. If string, use one of the available transformers. If list, each element can be a string or scikit-learn compatible transformer. If None (default), no transformation is applied.

See documentation for details.

- return_estimatorstr | None

Return the fitted estimator(s). Options are:

‘final’: Return the estimator fitted on all the data.

‘cv’: Return the all the estimator from each CV split, fitted on the training data.

‘all’: Return all the estimators (final and cv).

- return_inspectorbool

Whether to return the inspector object (default is False)

- return_train_scorebool

Whether to return the training score with the test scores (default is False).

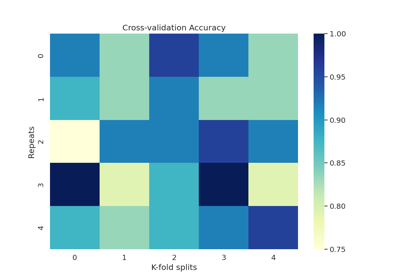

- cvint, str or cross-validation generator | None

Cross-validation splitting strategy to use for model evaluation.

Options are:

None: defaults to 5-fold

int: the number of folds in a (Stratified)KFold

CV Splitter (see scikit-learn documentation on CV)

An iterable yielding (train, test) splits as arrays of indices.

- groupsstr | None

The grouping labels in case a Group CV is used. See Data for details.

- scoringScorerLike, optional

The scoring metric to use. See https://scikit-learn.org/stable/modules/model_evaluation.html for a comprehensive list of options. If None, use the model’s default scorer.

- pos_labelsstr, int, float or list | None

The labels to interpret as positive. If not None, every element from y will be converted to 1 if is equal or in pos_labels and to 0 if not.

- model_paramsdict | None

If not None, this dictionary specifies the model parameters to use

The dictionary can define the following keys:

‘STEP__PARAMETER’: A value (or several) to be used as PARAMETER for STEP in the pipeline. Example: ‘svm__probability’: True will set the parameter ‘probability’ of the ‘svm’ model. If more than option is provided for at least one hyperparameter, a search will be performed.

- search_paramsdict | None

Additional parameters in case Hyperparameter Tuning is performed, with the following keys:

‘kind’: The kind of search algorithm to use, Valid options are:

"grid":GridSearchCV"random":RandomizedSearchCV"bayes":BayesSearchCV"optuna":OptunaSearchCVuser-registered searcher name : see

register_searcher()scikit-learn-compatible searcher

- ‘cv’: If a searcher is going to be used, the cross-validation

splitting strategy to use. Defaults to same CV as for the model evaluation.

- ‘scoring’: If a searcher is going to be used, the scoring metric to

evaluate the performance.

See Hyperparameter Tuning for details.

- seedint | None

If not None, set the random seed before any operation. Useful for reproducibility.

- n_jobsint, optional

Number of jobs to run in parallel. Training the estimator and computing the score are parallelized over the cross-validation splits.

Nonemeans 1 unless in ajoblib.parallel_backendcontext.-1means using all processors (default None).- verbose: int

Verbosity level of outer cross-validation. Follows scikit-learn/joblib converntions. 0 means no additional information is printed. Larger number generally mean more information is printed. Note: verbosity up to 50 will print into standard error, while larger than 50 will print in standrad output.

- Returns:

- scorespd.DataFrame

The resulting scores (one column for each score specified). Additionally, a ‘fit_time’ column will be added. And, if

return_estimator='all'orreturn_estimator='cv', an ‘estimator’ columns with the corresponding estimators fitted for each CV split.- final_estimatorobject

The final estimator, fitted on all the data (only if

return_estimator='all'orreturn_estimator='final')- inspectorInspector | None

The inspector object (only if

return_inspector=True)

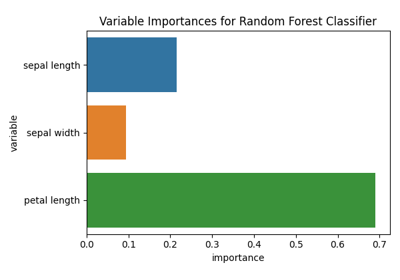

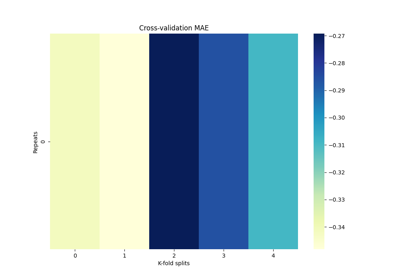

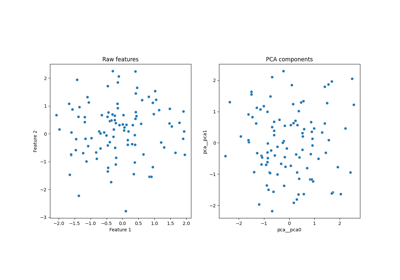

Examples using julearn.run_cross_validation#

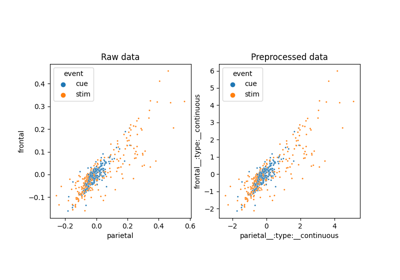

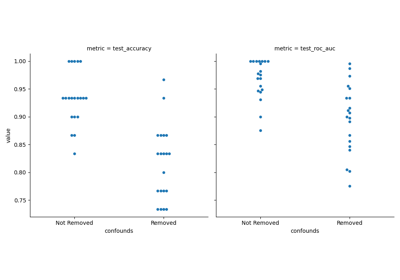

Preprocessing with variance threshold, zscore and PCA