Note

Go to the end to download the full example code

Regression Analysis#

This example uses the ‘diabetes’ data from sklearn datasets and performs a regression analysis using a Ridge Regression model. I will use the Julearn PipelineCreator to create a pipeline with two different PCA steps are used to reduce the dimensionality of the data, each one computed on a different subset of features.

# Authors: Georgios Antonopoulos <g.antonopoulos@fz-juelich.de>

# Kaustubh R. Patil <k.patil@fz-juelich.de>

# Shammi More <s.more@fz-juelich.de>

# Federico Raimondo <f.raimondo@fz-juelich.de>

#

# License: AGPL

import pandas as pd

import seaborn as sns

import numpy as np

import matplotlib.pyplot as plt

from sklearn.datasets import load_diabetes

from sklearn.metrics import mean_absolute_error

from sklearn.model_selection import train_test_split

from julearn import run_cross_validation

from julearn.utils import configure_logging

from julearn.pipeline import PipelineCreator

from julearn.inspect import preprocess

Set the logging level to info to see extra information

configure_logging(level="INFO")

2023-07-19 12:42:07,639 - julearn - INFO - ===== Lib Versions =====

2023-07-19 12:42:07,639 - julearn - INFO - numpy: 1.25.1

2023-07-19 12:42:07,639 - julearn - INFO - scipy: 1.11.1

2023-07-19 12:42:07,639 - julearn - INFO - sklearn: 1.3.0

2023-07-19 12:42:07,639 - julearn - INFO - pandas: 2.0.3

2023-07-19 12:42:07,640 - julearn - INFO - julearn: 0.3.1.dev1

2023-07-19 12:42:07,640 - julearn - INFO - ========================

load the diabetes data from sklearn as a pandas dataframe

features, target = load_diabetes(return_X_y=True, as_frame=True)

Dataset contains ten variables age, sex, body mass index, average blood pressure, and six blood serum measurements (s1-s6) diabetes patients and a quantitative measure of disease progression one year after baseline which is the target we are interested in predicting.

print("Features: \n", features.head())

print("Target: \n", target.describe())

Features:

age sex bmi ... s4 s5 s6

0 0.038076 0.050680 0.061696 ... -0.002592 0.019907 -0.017646

1 -0.001882 -0.044642 -0.051474 ... -0.039493 -0.068332 -0.092204

2 0.085299 0.050680 0.044451 ... -0.002592 0.002861 -0.025930

3 -0.089063 -0.044642 -0.011595 ... 0.034309 0.022688 -0.009362

4 0.005383 -0.044642 -0.036385 ... -0.002592 -0.031988 -0.046641

[5 rows x 10 columns]

Target:

count 442.000000

mean 152.133484

std 77.093005

min 25.000000

25% 87.000000

50% 140.500000

75% 211.500000

max 346.000000

Name: target, dtype: float64

Let’s combine features and target together in one dataframe and define X and y

Assign types to the features and create feature groups for PCA we will keep 1 component per PCA group

X_types = {

"pca1": ["age", "bmi", "bp"],

"pca2": ["s1", "s2", "s3", "s4", "s5", "s6"],

"categorical": ["sex"],

}

Create a pipeline to process the data and the fit a model. We must specify how each X_type will be used. For example if in the last step we do not specify apply_to=[‘continuous’, ‘categorical’], then the pipeline will not know what to do with the categorical features.

creator = PipelineCreator(problem_type="regression")

creator.add("pca", apply_to="pca1", n_components=1, name="pca_feats1")

creator.add("pca", apply_to="pca2", n_components=1, name="pca_feats2")

creator.add("ridge", apply_to=["continuous", "categorical"])

2023-07-19 12:42:07,657 - julearn - INFO - Adding step pca_feats1 that applies to ColumnTypes<types={'pca1'}; pattern=(?:__:type:__pca1)>

2023-07-19 12:42:07,657 - julearn - INFO - Setting hyperparameter n_components = 1

2023-07-19 12:42:07,658 - julearn - INFO - Step added

2023-07-19 12:42:07,658 - julearn - INFO - Adding step pca_feats2 that applies to ColumnTypes<types={'pca2'}; pattern=(?:__:type:__pca2)>

2023-07-19 12:42:07,658 - julearn - INFO - Setting hyperparameter n_components = 1

2023-07-19 12:42:07,658 - julearn - INFO - Step added

2023-07-19 12:42:07,658 - julearn - INFO - Adding step ridge that applies to ColumnTypes<types={'categorical', 'continuous'}; pattern=(?:__:type:__categorical|__:type:__continuous)>

2023-07-19 12:42:07,658 - julearn - INFO - Step added

<julearn.pipeline.pipeline_creator.PipelineCreator object at 0x7f7f62f0d600>

Split the dataset into train and test

train_diabetes, test_diabetes = train_test_split(data_diabetes, test_size=0.3)

Train a ridge regression model on train dataset and use mean absolute error for scoring/

2023-07-19 12:42:07,659 - julearn - INFO - ==== Input Data ====

2023-07-19 12:42:07,659 - julearn - INFO - Using dataframe as input

2023-07-19 12:42:07,659 - julearn - INFO - Features: ['age', 'sex', 'bmi', 'bp', 's1', 's2', 's3', 's4', 's5', 's6']

2023-07-19 12:42:07,660 - julearn - INFO - Target: target

2023-07-19 12:42:07,660 - julearn - INFO - Expanded features: ['age', 'sex', 'bmi', 'bp', 's1', 's2', 's3', 's4', 's5', 's6']

2023-07-19 12:42:07,660 - julearn - INFO - X_types:{'pca1': ['age', 'bmi', 'bp'], 'pca2': ['s1', 's2', 's3', 's4', 's5', 's6'], 'categorical': ['sex']}

2023-07-19 12:42:07,661 - julearn - INFO - ====================

2023-07-19 12:42:07,661 - julearn - INFO -

2023-07-19 12:42:07,662 - julearn - INFO - = Model Parameters =

2023-07-19 12:42:07,662 - julearn - INFO - ====================

2023-07-19 12:42:07,662 - julearn - INFO -

2023-07-19 12:42:07,662 - julearn - INFO - = Data Information =

2023-07-19 12:42:07,662 - julearn - INFO - Problem type: regression

2023-07-19 12:42:07,662 - julearn - INFO - Number of samples: 309

2023-07-19 12:42:07,663 - julearn - INFO - Number of features: 10

2023-07-19 12:42:07,663 - julearn - INFO - ====================

2023-07-19 12:42:07,663 - julearn - INFO -

2023-07-19 12:42:07,663 - julearn - INFO - Target type: float64

2023-07-19 12:42:07,663 - julearn - INFO - Using outer CV scheme KFold(n_splits=5, random_state=None, shuffle=False)

The scores dataframe has all the values for each CV split.

print(scores.head())

fit_time score_time ... fold cv_mdsum

0 0.016804 0.007410 ... 0 b10eef89b4192178d482d7a1587a248a

1 0.016177 0.007426 ... 1 b10eef89b4192178d482d7a1587a248a

2 0.015846 0.007478 ... 2 b10eef89b4192178d482d7a1587a248a

3 0.015954 0.007679 ... 3 b10eef89b4192178d482d7a1587a248a

4 0.015999 0.007387 ... 4 b10eef89b4192178d482d7a1587a248a

[5 rows x 8 columns]

Mean value of mean absolute error across CV

print(scores["test_score"].mean())

0.3107976743678922

Let’s see how the data looks like after preprocessing We will process the data until the first PCA step We should get the first PCA component for [‘age’, ‘bmi’, ‘bp’] and other features untouched

data_processed1 = preprocess(model, X, data=train_diabetes, until="pca_feats1")

print("Data after preprocessing until PCA step 1")

print(data_processed1.head())

# We will process the data until the second PCA step

# We should now also get one PCA component for ['s1', 's2', 's3', 's4', 's5', 's6']

data_processed2 = preprocess(model, X, data=train_diabetes, until="pca_feats2")

print("Data after preprocessing until PCA step 2")

print(data_processed2.head())

Data after preprocessing until PCA step 1

pca_feats1__pca0 sex s1 ... s4 s5 s6

161 -0.063175 0.050680 0.133274 ... 0.108111 0.075741 0.085907

140 -0.054779 0.050680 -0.030464 ... -0.002592 -0.033246 0.015491

145 -0.098172 -0.044642 -0.033216 ... -0.039493 -0.015999 -0.050783

9 0.032289 -0.044642 -0.012577 ... -0.002592 0.067737 -0.013504

315 0.045025 -0.044642 0.031454 ... -0.039493 -0.010903 -0.001078

[5 rows x 8 columns]

Data after preprocessing until PCA step 2

pca_feats2__pca0 pca_feats1__pca0 sex

161 0.234716 -0.063175 0.050680

140 -0.012141 -0.054779 0.050680

145 -0.078784 -0.098172 -0.044642

9 0.006290 0.032289 -0.044642

315 -0.026190 0.045025 -0.044642

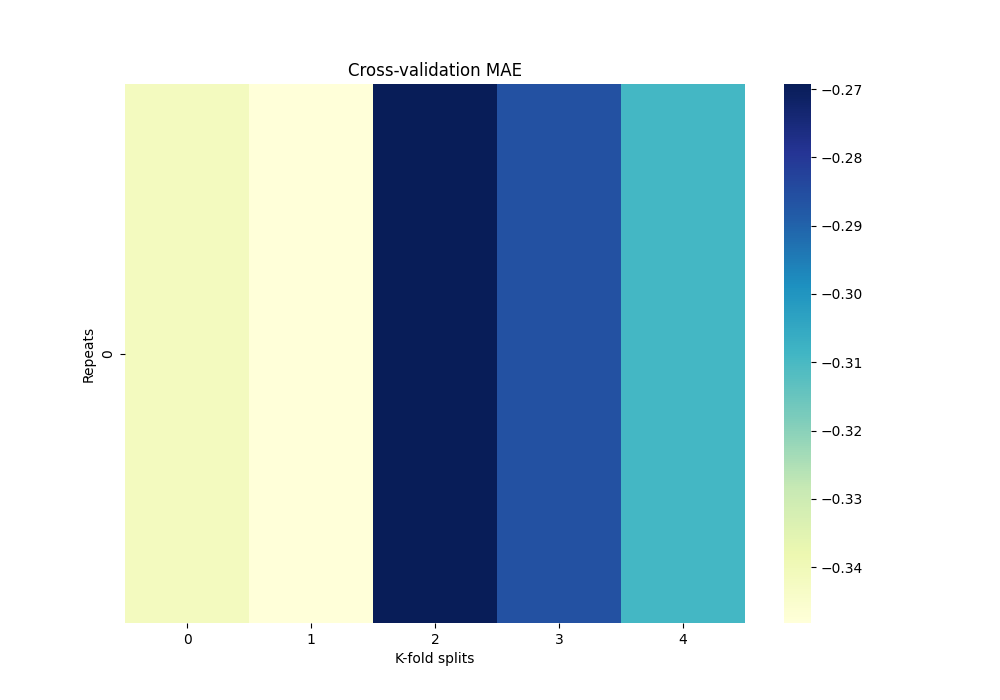

Now we can get the MAE fold and repetition:

df_mae = scores.set_index(["repeat", "fold"])["test_score"].unstack() * -1

df_mae.index.name = "Repeats"

df_mae.columns.name = "K-fold splits"

print(df_mae)

K-fold splits 0 1 2 3 4

Repeats

0 -0.341472 -0.348168 -0.269257 -0.286067 -0.309025

Plot heatmap of mean absolute error (MAE) over all repeats and CV splits

fig, ax = plt.subplots(1, 1, figsize=(10, 7))

sns.heatmap(df_mae, cmap="YlGnBu")

plt.title("Cross-validation MAE")

Text(0.5, 1.0, 'Cross-validation MAE')

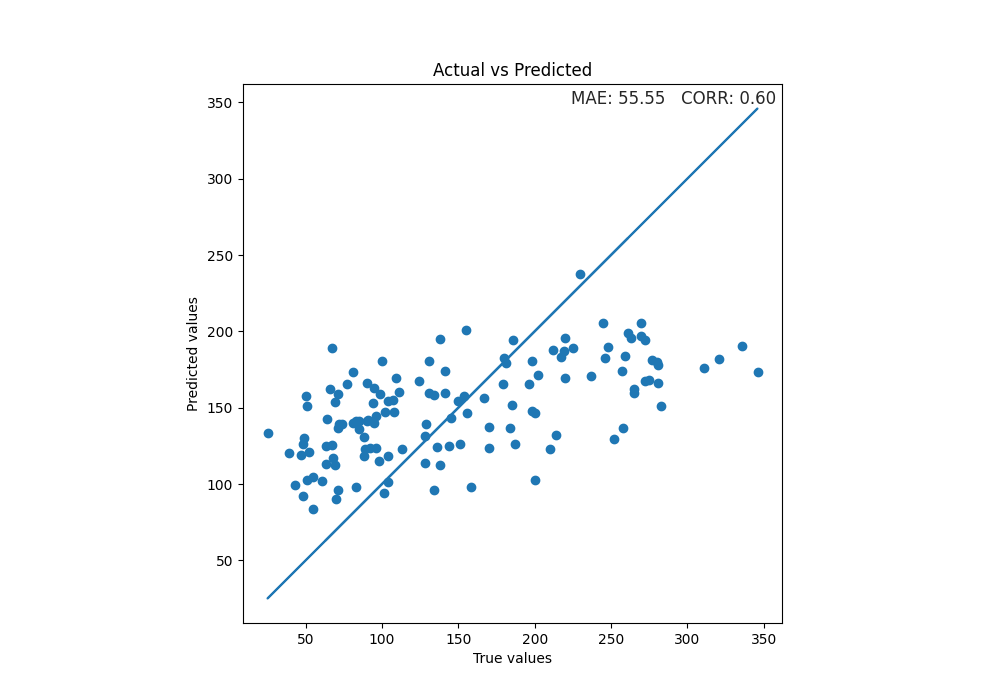

Use the final model to make predictions on test data and plot scatterplot of true values vs predicted values

y_true = test_diabetes[y]

y_pred = model.predict(test_diabetes[X])

mae = format(mean_absolute_error(y_true, y_pred), ".2f")

corr = format(np.corrcoef(y_pred, y_true)[1, 0], ".2f")

fig, ax = plt.subplots(1, 1, figsize=(10, 7))

sns.set_style("darkgrid")

plt.scatter(y_true, y_pred)

plt.plot(y_true, y_true)

xmin, xmax = ax.get_xlim()

ymin, ymax = ax.get_ylim()

text = "MAE: " + str(mae) + " CORR: " + str(corr)

ax.set(xlabel="True values", ylabel="Predicted values")

plt.title("Actual vs Predicted")

plt.text(

xmax - 0.01 * xmax,

ymax - 0.01 * ymax,

text,

verticalalignment="top",

horizontalalignment="right",

fontsize=12,

)

plt.axis("scaled")

(8.95, 362.05, 8.95, 362.05)

Total running time of the script: ( 0 minutes 0.453 seconds)